How Nimble AI Delivered a Tamper-Proof Biometric Platform for a Pharmaceutical Innovator in Under 5 Months

Introduction

In regulated industries such as life sciences and healthcare, the verifiability of data is as critical as the data itself. Pharmaceutical and clinical research organizations must meet stringent regulatory standards for auditability, data lineage, and security—particularly when dealing with biometric telemetry in drug trials. The technical requirements for these systems go beyond conventional data ingestion pipelines. They must assure that data, once recorded, remains immutable, independently verifiable, and operationally observable throughout its lifecycle.

A pharmaceutical startup engaged Nimble AI Services to design and implement a telemetry ingestion platform capable of meeting these requirements. The platform needed to handle high-frequency biometric data from distributed patient devices while enabling real-time anomaly detection, long-term auditability, and full compliance with frameworks such as FDA 21 CFR Part 11, HIPAA, and GDPR. The engagement required not only a robust and secure architecture, but also a rapid delivery timeline—with a production deployment targeted in under five months.

Connect with Nimble AI Services to learn how we can accelerate your next project involving secure, auditable, and high-throughput data infrastructure.

Business Challenge

The client was preparing to launch a clinical study that involved deploying biometric sensors to over one hundred patients. Each device transmitted health vitals, including heart rate, oxygen saturation, and blood pressure, at regular ten-second intervals. This high-throughput environment required a fault-tolerant and horizontally scalable architecture that could ensure continuous data capture while validating the authenticity of each incoming record.

Beyond operational scale, the platform had to guarantee that once data was written, it could not be altered or deleted, even by privileged users. Data integrity needed to be provable using cryptographic methods. Furthermore, any anomalies in record sequencing, timestamps, or update behavior had to be automatically detected and flagged in real time.

The solution would also need to support a layered security model, maintain a separation of concerns across microservices, and enable audit-friendly interfaces for internal and external stakeholders.

Solution Overview

Nimble AI Services architected a modern, event-driven platform grounded in microservices and powered by immudb, an open-source immutable database that provides cryptographic verifiability for all writes. The system was designed to operate with complete traceability from ingestion to audit, and to provide a resilient foundation for scale, compliance, and observability.

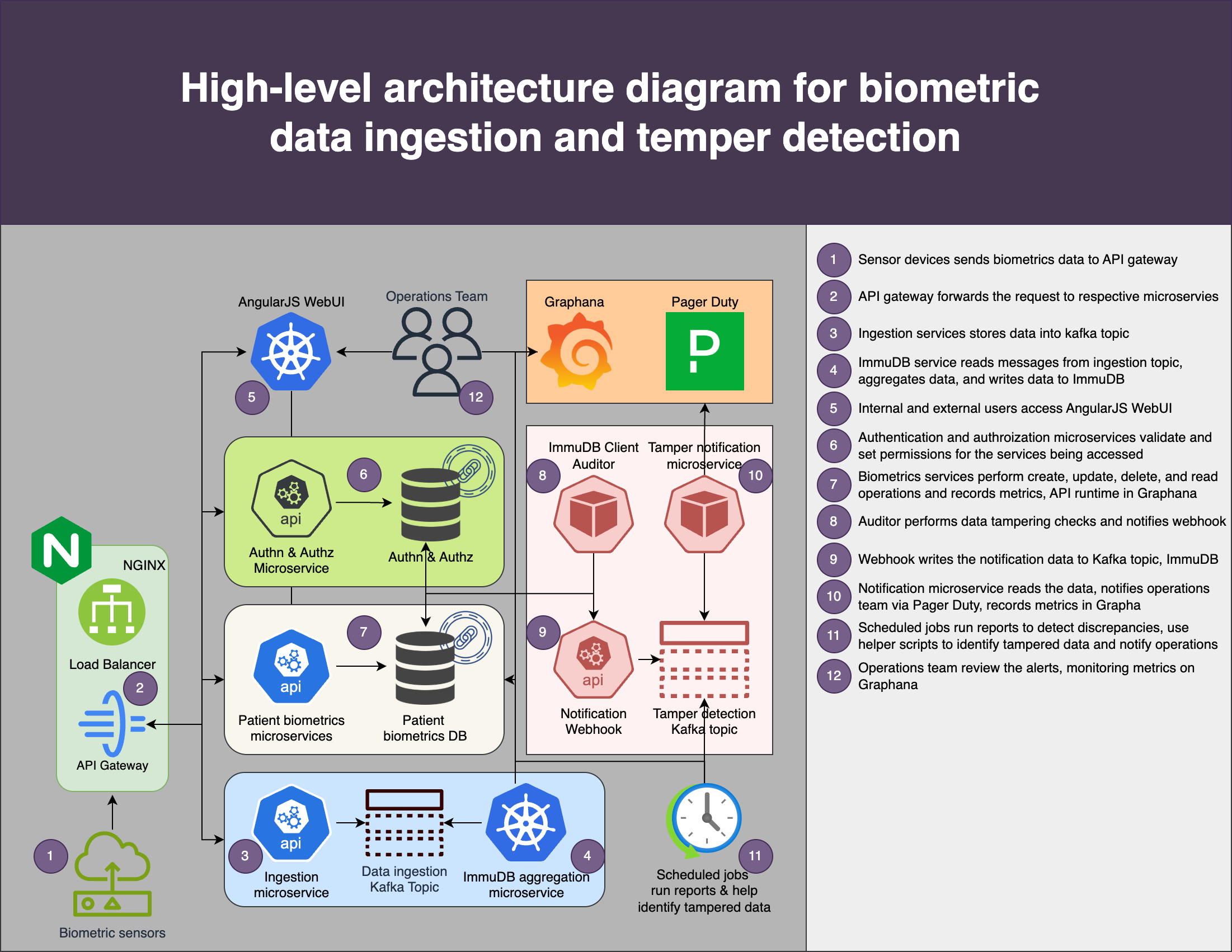

Biometric devices transmitted data to a centralized API Gateway, which handled request routing and load balancing. Requests were then validated and forwarded to dedicated ingestion microservices, which published records to a Kafka-backed message queue. This messaging layer served as a decoupled, persistent stream capable of supporting concurrent downstream consumers.
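As an illustration of the validation and publishing step, the following is a minimal sketch, not the client's actual service. The field names, the plausibility bounds, and the `VitalsReading`, `validate`, and `to_message` names are all assumptions made for this example. It shows a reading being checked and then serialized into a key/value pair of the kind a Kafka producer would send.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical plausibility bounds; real limits would come from the study protocol.
BOUNDS = {
    "heart_rate_bpm": (20, 250),
    "spo2_percent": (50, 100),
    "systolic_mmhg": (50, 260),
    "diastolic_mmhg": (30, 160),
}


@dataclass(frozen=True)
class VitalsReading:
    device_id: str
    sequence: int        # per-device counter, assumed to be set by the device
    recorded_at: str     # ISO 8601 timestamp reported by the device
    heart_rate_bpm: float
    spo2_percent: float
    systolic_mmhg: float
    diastolic_mmhg: float


def validate(reading):
    """Return a list of validation errors; an empty list means the reading is accepted."""
    errors = []
    if not reading.device_id:
        errors.append("device_id is required")
    if reading.sequence < 0:
        errors.append("sequence must be non-negative")
    for field, (low, high) in BOUNDS.items():
        value = getattr(reading, field)
        if not low <= value <= high:
            errors.append(f"{field}={value} outside [{low}, {high}]")
    return errors


def to_message(reading):
    """Serialize a reading into (key, value) bytes for a message broker."""
    key = reading.device_id.encode("utf-8")
    value = json.dumps(asdict(reading), sort_keys=True).encode("utf-8")
    return key, value
```

Keying each message by device ID is a design choice in this sketch: with Kafka's default partitioning, records that share a key go to the same partition, which preserves per-device ordering for downstream consumers.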

A dedicated aggregation microservice consumed messages from Kafka, applied interval-based transformations, and committed the cleaned, structured records to immudb. Each write was cryptographically hashed and chained using Merkle tree structures to ensure tamper-evidence.
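To make the tamper-evidence property concrete, here is a generic Merkle root computation using only the Python standard library. It is a sketch of the general technique and does not reproduce immudb's internal data structures. The `merkle_root` function and the leaf and node prefixes are choices made for this example.

```python
import hashlib


def _sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(records):
    """Compute a Merkle root over a list of byte strings.

    Leaves and interior nodes are hashed with different prefixes (0x00 and
    0x01) so that a leaf can never be mistaken for an interior node.
    """
    if not records:
        return _sha256(b"")
    level = [_sha256(b"\x00" + record) for record in records]
    while len(level) > 1:
        next_level = []
        # Hash adjacent pairs of nodes into their parent.
        for i in range(0, len(level) - 1, 2):
            next_level.append(_sha256(b"\x01" + level[i] + level[i + 1]))
        # An unpaired last node is promoted to the next level unchanged.
        if len(level) % 2 == 1:
            next_level.append(level[-1])
        level = next_level
    return level[0]
```

Because every record contributes to the root, changing any stored record changes the root. A verifier that holds a previously trusted root can therefore detect a modification without trusting the database operator.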

Authentication and authorization services were implemented as independent microservices, responsible for validating device credentials and enforcing access policies. All API interactions, operational metrics, and system events were forwarded to Grafana, providing real-time dashboards for performance monitoring and anomaly visualization.

Tamper Detection and Auditing

To supplement immudb’s append-only ledger and integrity guarantees, the platform incorporated a client-mode immudb auditor, which continuously verified the state of the ledger against a trusted hash history. If discrepancies were detected—such as sequence violations or corrupted entries—the auditor would trigger a webhook notification.
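The auditing idea can be sketched as follows. This is a simplified model, not immudb's auditor: it uses a plain hash chain and recomputes the history, whereas a production auditor would rely on the database's own proofs. `TrustedState`, `state_at`, and `audit` are hypothetical names introduced for illustration.

```python
import hashlib
from dataclasses import dataclass

GENESIS = b"\x00" * 32


def chain_hash(previous: bytes, payload: bytes) -> bytes:
    """Fold one entry into the cumulative ledger digest."""
    return hashlib.sha256(previous + payload).digest()


def state_at(entries, tx_id):
    """Recompute the cumulative digest after the first tx_id entries."""
    digest = GENESIS
    for payload in entries[:tx_id]:
        digest = chain_hash(digest, payload)
    return digest


@dataclass(frozen=True)
class TrustedState:
    tx_id: int      # number of entries covered by the digest
    digest: bytes   # cumulative digest the auditor last accepted


def audit(entries, trusted):
    """Check the ledger against the last trusted state.

    Returns (ok, reason, state). On success the state advances to cover the
    whole ledger; on failure the previous trusted state is kept.
    """
    if len(entries) < trusted.tx_id:
        return False, "ledger is shorter than trusted state (possible rollback)", trusted
    if state_at(entries, trusted.tx_id) != trusted.digest:
        return False, "history before trusted state was modified", trusted
    return True, "consistent", TrustedState(len(entries), state_at(entries, len(entries)))
```

In this model the auditor stores only a transaction count and a digest, so it can run outside the database's trust boundary. A failed check is the point at which an alert would be raised.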

The webhook payload was delivered both to immudb and to a Kafka topic dedicated to security events. A separate notification microservice monitored this topic and issued incident alerts to the operations team via PagerDuty, while also logging the anomaly to Grafana for investigation.
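A notification service of this kind might translate a security event into a PagerDuty alert roughly as shown below. The sketch only builds the request body in the shape used by the PagerDuty Events API v2 and does not send it. The layout of the `anomaly` dictionary and the `build_pagerduty_event` name are assumptions for this example.

```python
# Events API v2 endpoint to which the event would be POSTed as JSON.
PAGERDUTY_EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"


def build_pagerduty_event(routing_key, anomaly):
    """Build an Events API v2 'trigger' event from a hypothetical anomaly record."""
    return {
        "routing_key": routing_key,
        "event_action": "trigger",
        # The same ledger and transaction always map to the same incident,
        # so repeated detections do not open duplicate incidents.
        "dedup_key": f"{anomaly['ledger']}:{anomaly['tx_id']}",
        "payload": {
            "summary": f"Ledger integrity check failed: {anomaly['reason']}",
            "source": anomaly["ledger"],
            "severity": "critical",
            "timestamp": anomaly["detected_at"],
            "custom_details": dict(anomaly),
        },
    }
```

Deriving `dedup_key` from the ledger name and transaction ID is one possible choice. It keeps a persistent fault from paging the operations team once per audit cycle.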

In addition to real-time monitoring, the system scheduled daily audit jobs that ran integrity checks across the full dataset. These jobs compared historical write patterns, calculated deltas, and cross-referenced expected versus actual state changes to detect subtle forms of data tampering or system drift.
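One check that such an audit job could run is a scan of each device's stored readings for sequence and timestamp irregularities. The sketch below assumes a per-device sequence counter and the ten-second reporting interval described earlier. The `find_anomalies` name and the two-second tolerance are illustrative choices, not details from the engagement.

```python
from datetime import datetime, timedelta


def find_anomalies(readings,
                   expected_interval=timedelta(seconds=10),
                   tolerance=timedelta(seconds=2)):
    """Scan one device's readings, given as (sequence, timestamp) in stored order.

    Returns a list of (sequence, description) tuples for each irregularity.
    """
    anomalies = []
    for (prev_seq, prev_ts), (seq, ts) in zip(readings, readings[1:]):
        # Sequence checks: duplicates, reordering, and gaps.
        if seq == prev_seq:
            anomalies.append((seq, "duplicate sequence"))
        elif seq < prev_seq:
            anomalies.append((seq, "sequence went backwards"))
        elif seq > prev_seq + 1:
            anomalies.append((seq, f"gap of {seq - prev_seq - 1} record(s)"))
        # Timestamp checks: must increase, and consecutive records
        # should be roughly one reporting interval apart.
        if ts <= prev_ts:
            anomalies.append((seq, "timestamp not increasing"))
        elif seq == prev_seq + 1 and abs((ts - prev_ts) - expected_interval) > tolerance:
            anomalies.append((seq, "unexpected interval"))
    return anomalies
```

A job built on this would run the scan per device and publish any non-empty result as a security event.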

Reusability and Evaluation Strategy

A key architectural decision involved decoupling ingestion from storage through the use of Kafka. This enabled the implementation of parallel consumers that could process the same telemetry stream and write to different database backends. During the evaluation phase, the client used this capability to benchmark PostgreSQL, Dolt, and immudb side by side using identical workloads.
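The fan-out pattern can be illustrated with an in-memory stand-in for a Kafka topic. In Kafka, consumers with different group IDs each receive the full stream, and the sketch models that with one offset per group. `InMemoryLog`, `ListSink`, and `drain` are hypothetical names for this example; a real evaluation would use a Kafka client and actual database adapters.

```python
class InMemoryLog:
    """Stand-in for a single topic partition: an append-only list with one offset per group."""

    def __init__(self):
        self._records = []
        self._offsets = {}

    def append(self, record):
        self._records.append(record)

    def poll(self, group_id, max_records=100):
        """Return the next batch for this group and advance only that group's offset."""
        start = self._offsets.get(group_id, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group_id] = start + len(batch)
        return batch


class ListSink:
    """Hypothetical backend adapter; a real one would wrap a database client."""

    def __init__(self, name):
        self.name = name
        self.rows = []

    def write(self, record):
        self.rows.append(record)


def drain(log, sinks):
    """Let each sink consume the whole log under its own consumer group."""
    for sink in sinks:
        while True:
            batch = log.poll(group_id=f"bench-{sink.name}")
            if not batch:
                break
            for record in batch:
                sink.write(record)
```

Because each backend reads under its own group, adding or removing a candidate database does not affect the producers or the other consumers.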

Metrics such as ingestion throughput, query latency, and API response times were collected and analyzed using Grafana, allowing the client to make data-driven decisions about future platform investments. This pattern remains extensible and supports ongoing technology evaluations without requiring changes to the ingestion pipeline.

Business Outcomes

As a result of this engagement, the client successfully deployed a platform that was:

Delivered within five months, aligned with clinical study timelines and regulatory review milestones

Cryptographically tamper-evident, using FIPS 140-2 compliant immudb for immutable record storage

Operationally resilient, with multi-AZ and regionally replicated infrastructure for high availability

Fully observable, with integrated metrics, logs, and dashboards using Grafana

Extensible and modular, supporting simultaneous database backends for performance evaluation

Compliant with HIPAA, GDPR, and FDA 21 CFR Part 11, with automated auditing and anomaly detection

Conclusion

The implementation of this telemetry ingestion and tamper detection platform demonstrates Nimble AI’s ability to execute complex, regulated system builds under aggressive timelines without compromising on reliability, traceability, or compliance. Through the application of immutable data patterns, microservices engineering, and real-time observability, the platform not only addressed immediate clinical trial requirements but also positioned the client with a scalable foundation for long-term data trust.

Organizations seeking to implement audit-grade infrastructure for telemetry, financial, or regulated workloads can benefit from Nimble AI’s proven methodologies and delivery discipline.